In my previous post, AWS TIPS AND TRICKS: Automatically create a cron job at Instance creation, I mentioned that I was copying files from S3 onto the instance with the AWS CLI’s S3 sync command. Following on from that, I thought I would share how.

The concept

When launching an EC2 instance, I needed to copy some files onto it: specifically a Python script, a file containing a cron schedule, and a shell script to run after the copy.

How?

I decided to use the AWS CLI’s S3 sync command for this, since the AWS CLI tools come pre-installed on the Amazon Linux AMIs. The S3 sync command itself is easy to use and simple to understand, and as always AWS have great documentation on it.

The command I used was pretty basic:

```bash
aws s3 sync s3://s3-bucket-name/folder /home/ec2-user
```

Essentially, the command copies the files under folder in s3-bucket-name into the /home/ec2-user directory on the EC2 instance. On later runs it only copies objects that are new or have changed, so it is cheap to re-run.
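Because sync only transfers what is missing or appears to have changed, it helps to preview a run before relying on it. The sketch below is a minimal wrapper, assuming bash; the function name pull_config and its argument order are hypothetical. Any extra arguments are passed straight through to aws s3 sync, so real options such as --dryrun (show what would be copied without copying) or --exclude can be added per call.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sync one S3 "folder" (key prefix) into a local directory.
# Usage: pull_config <bucket> <prefix> <dest> [extra aws s3 sync options...]
pull_config() {
  local bucket="$1" prefix="$2" dest="$3"
  shift 3
  # Remaining arguments (e.g. --dryrun, --exclude "*.tmp") are passed to the CLI unchanged
  aws s3 sync "s3://${bucket}/${prefix}" "$dest" "$@"
}
```

For example, pull_config s3-bucket-name folder /home/ec2-user --dryrun lists the download operations it would perform without changing anything. Adding --delete would also remove local files that no longer exist under the prefix, so use it with care when the destination is a home directory.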

The command itself is simple, but a few things need to be in place for it to work; the most important is granting the EC2 instance access to the S3 bucket.

Allowing access to the S3 bucket

The easy way (but in my view not the recommended way) is to configure the CLI tools on the EC2 instance with the access key ID and secret access key of an IAM user that has access to the bucket. The alternative is the role-based approach.

I don’t like the easy approach as a permanent solution because it means storing your access keys somewhere on the instance. Setting aside the security risks this creates, if you rotate your keys (and you should rotate them often) you’ll need to remember to update them wherever they are used.
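One practical catch when the two approaches get mixed: the CLI looks for credentials in environment variables and the ~/.aws/credentials file before it falls back to an instance role, so keys left on a server quietly take precedence. aws sts get-caller-identity shows which identity the CLI is really using. A minimal sketch, assuming bash; the function name using_assumed_role is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check: is the CLI using temporary role credentials or long-lived user keys?
# Role sessions (including an instance role) look like arn:aws:sts::<account>:assumed-role/...
# IAM user keys look like arn:aws:iam::<account>:user/...
using_assumed_role() {
  local arn
  arn=$(aws sts get-caller-identity --query Arn --output text) || return 1
  [[ "$arn" == arn:aws:sts::*:assumed-role/* ]]
}
```

Run on an instance set up with a role, this should succeed; if it fails, some stored keys are being picked up ahead of the role.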

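I went for the ‘assume role’ approach, where the EC2 instance assumes an IAM role and is allowed to perform only the actions that the role’s policies permit. The CLI on the instance picks up temporary credentials for the role automatically, so no keys are stored on the server. So how do you set this up?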

First, create an IAM policy that allows reading objects in the S3 bucket

To create the policy, navigate to Policies on the IAM Dashboard and select Create policy. You can copy an AWS managed policy, enter the policy text manually, or point and click to build it. I used the point-and-click Policy Generator to create a policy that looked like this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1467685105000",
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::<s3-bucket-name>"
      ]
    },
    {
      "Sid": "Stmt1467685155000",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::<s3-bucket-name>/<Folder>/*"
      ]
    }
  ]
}
```

For this example, I only need read access to the objects. The policy is split into two statements because the s3:ListBucket action applies to the bucket itself, while s3:GetObject applies to the objects in the bucket. The sync command needs both: ListBucket to see which objects exist, and GetObject to download them.
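If you would rather script this step than use the Policy Generator, the same two statements can be generated for any bucket and folder and created with aws iam create-policy. A minimal sketch, assuming bash and a CLI profile that has IAM permissions; the function names and the policy name you pass in are hypothetical, and the Sid values are left out because they are optional.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: generate the read-only policy for a bucket and folder,
# then create it as a customer managed policy.
s3_read_policy() {
  local bucket="$1" prefix="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::${bucket}"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::${bucket}/${prefix}/*"]
    }
  ]
}
EOF
}

create_s3_read_policy() {
  local name="$1" bucket="$2" prefix="$3"
  # The CLI accepts the JSON document inline and prints the new policy's ARN
  aws iam create-policy --policy-name "$name" \
    --policy-document "$(s3_read_policy "$bucket" "$prefix")" \
    --query Policy.Arn --output text
}
```

For example, create_s3_read_policy s3-sync-read s3-bucket-name folder prints the ARN of the new policy, which is what you need when attaching it to a role.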

Next, create a role that allows an EC2 instance to access the S3 bucket

Navigate to the Roles section of the IAM Dashboard and select Create role. Choose EC2 as the service that will use the role, follow the wizard, and attach the policy created above.
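Behind the scenes an EC2 role needs two things: a trust policy that lets the EC2 service assume it, and an instance profile, which is the container EC2 actually attaches to an instance. The console wizard handles both for you. A rough CLI equivalent, assuming bash and the ARN of the policy created above, might look like this; the function and role names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: a CLI version of the console's "create role for EC2" wizard.

# Trust policy: allows the EC2 service to assume the role on the instance's behalf.
ec2_trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

create_ec2_role() {
  local role="$1" policy_arn="$2"
  aws iam create-role --role-name "$role" \
    --assume-role-policy-document "$(ec2_trust_policy)" || return 1
  aws iam attach-role-policy --role-name "$role" --policy-arn "$policy_arn" || return 1
  # The console creates a same-named instance profile for EC2 roles; the CLI does not.
  aws iam create-instance-profile --instance-profile-name "$role" || return 1
  aws iam add-role-to-instance-profile --instance-profile-name "$role" --role-name "$role"
}
```

IAM changes are eventually consistent, so launching an instance with a brand-new instance profile can fail for a short while; waiting a few seconds and retrying usually sorts it out.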

Finally, add the role to the EC2 instance

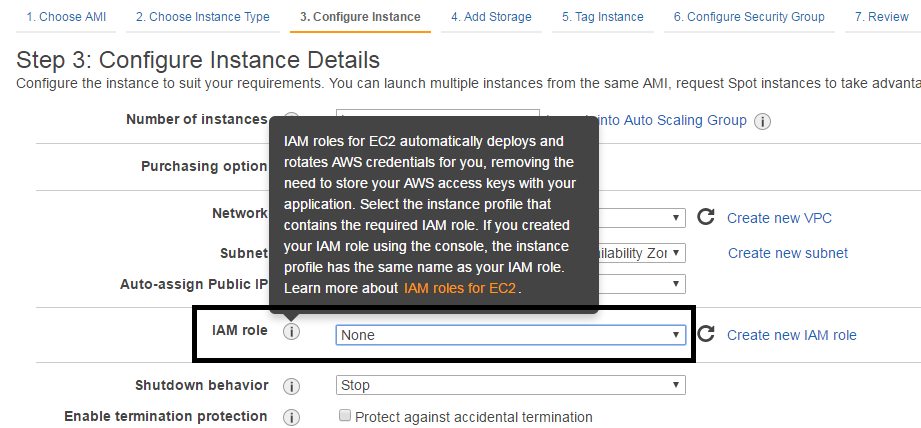

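At the time of writing, an EC2 instance could only be assigned a role at launch, and the role couldn’t be changed afterwards (AWS has since added the ability to attach or replace an IAM role on a running instance). Navigate to the EC2 Dashboard and select Launch Instance. Follow the wizard, and on “Step 3: Configure Instance Details” select the role you created for the IAM role option.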
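From the CLI, the same wizard step is the --iam-instance-profile option of aws ec2 run-instances, which takes the instance profile name (the same as the role name when the role was created in the console). For an instance that is already running, aws ec2 associate-iam-instance-profile attaches one. A minimal sketch, assuming bash; the function names are hypothetical and the AMI ID, instance type, profile name and user data file are placeholders you supply.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: attach the instance profile at launch, or to a running instance.

launch_with_role() {
  local ami="$1" type="$2" profile="$3" userdata="$4"
  # A real launch usually also needs --key-name, --security-group-ids and --subnet-id.
  # The CLI base64-encodes the user data file for run-instances.
  aws ec2 run-instances --image-id "$ami" --instance-type "$type" \
    --iam-instance-profile "Name=${profile}" \
    --user-data "file://${userdata}" \
    --query 'Instances[0].InstanceId' --output text
}

# Attach an instance profile to an instance that is already running
attach_role() {
  local instance_id="$1" profile="$2"
  aws ec2 associate-iam-instance-profile --instance-id "$instance_id" \
    --iam-instance-profile "Name=${profile}"
}
```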

To do what I needed: add user data to run the S3 command

Note: this step can be skipped if you just want to log on to the server and run the command yourself.

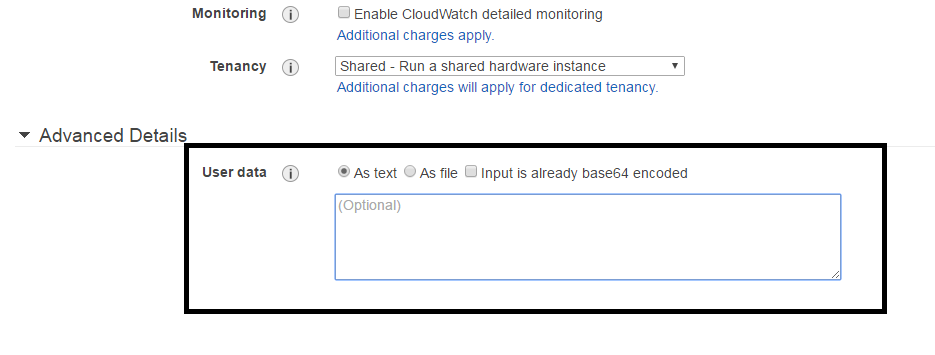

At the bottom of “Step 3: Configure Instance Details”, expand the Advanced Details section.

Add the following to the User data field:

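```bash
#!/bin/bash
aws s3 sync s3://optimal-aws-nz-play-config/ec2-operator /home/ec2-user
# User data runs as root, so hand ownership of the synced files back to ec2-user
chown -R ec2-user:ec2-user /home/ec2-user
```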

Continue through the wizard.

That’s it. All being well, once you log on to the instance you should see the files that were synced from your S3 bucket.
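If the files are not there, the user data script’s output is the first place to look. On Amazon Linux, cloud-init captures that output in /var/log/cloud-init-output.log. A minimal sketch of a check to run after logging on, assuming bash; the function name is hypothetical, and depending on the AMI you may need sudo to read the log.

```bash
#!/usr/bin/env bash
# Hypothetical check, run on the instance after logging on.
check_user_data_sync() {
  local log="${1:-/var/log/cloud-init-output.log}" dest="${2:-/home/ec2-user}"
  # aws s3 sync prints a "download: s3://... to ..." line for each object it copies
  grep 'download: s3://' "$log" || echo "no S3 downloads found in $log" >&2
  ls -l "$dest"
}
```

An "Unable to locate credentials" or "Access Denied" error in the same log usually points back to the role or the policy.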

Barry, Preventer of Chaos